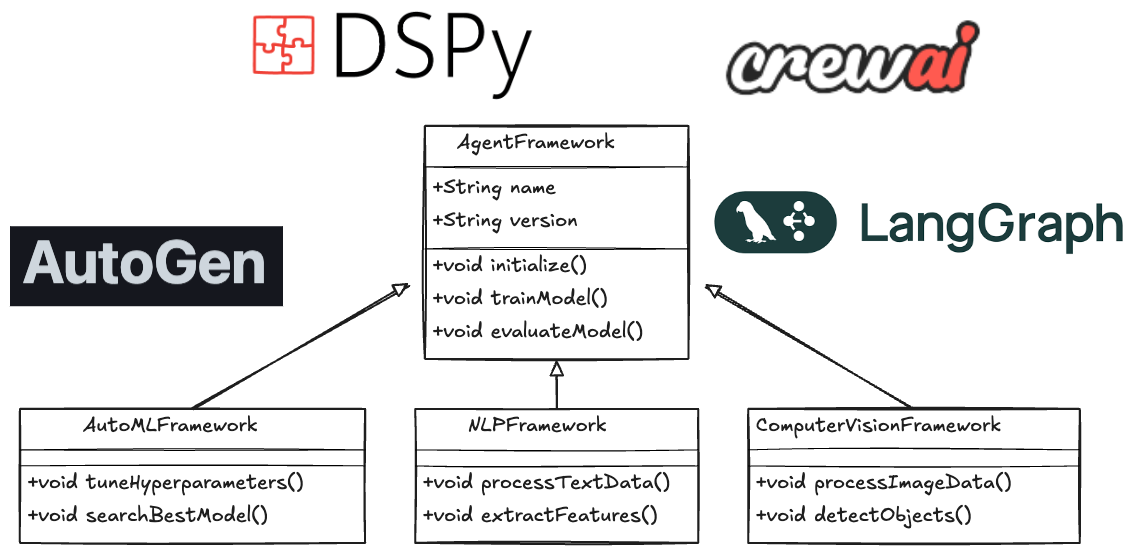

Comparative Analysis of AI Agent Frameworks with DSPy: LangGraph, AutoGen, CrewAI, and Beyond

Introduction

Last week at Devon we were building a proof of concept (POC) for an application based on AI agents. The first task was deciding which framework to use, since there were many options and our client wanted to avoid LangGraph. During that research I came across DSPy. The rise of AI agents has transformed how developers build intelligent systems, enabling everything from autonomous customer service bots to adaptive educational tools. Frameworks like LangGraph, AutoGen, CrewAI, and OpenAI Swarm dominate the ecosystem, each offering unique strengths in workflow design, multi-agent collaboration, and production readiness. This article compares DSPy with these frameworks across cost, learning curve, code quality, design patterns, tool coverage, and enterprise scalability, incorporating insights from industry benchmarks and developer feedback.

Framework Overviews

1. LangGraph

- Core Philosophy: Graph-based state management, with workflows modeled as directed graphs that may contain cycles, for precise control over multi-agent workflows.

- Key Features:

  - Stateful Workflows: Built-in error recovery, time-travel debugging, and cyclical task execution.

  - Memory Systems: Short-term, long-term, and entity memory for context-aware interactions.

  - Tool Integration: Seamless compatibility with LangChain’s 100+ tools (e.g., vector databases, web scrapers).

- Use Cases: Complex RAG systems, multi-step decision trees, and self-correcting chatbots.
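To make the idea of a stateful graph with a cycle concrete, here is a minimal plain-Python sketch. It does not use the LangGraph API; the node names, the `run_graph` helper, and the toy "draft until approved" logic are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

State = Dict[str, object]


def draft(state: State) -> State:
    # Stand-in for an LLM call: each pass produces a slightly longer draft.
    attempts = int(state.get("attempts", 0)) + 1
    return {**state, "attempts": attempts, "draft": "x" * attempts}


def review(state: State) -> State:
    # Approve only once the draft reaches the required length.
    return {**state, "approved": len(str(state["draft"])) >= 3}


def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review passes.
    return "END" if state["approved"] else "draft"


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",
    "review": route_after_review,
}


def run_graph(start: str, state: State, max_steps: int = 20) -> State:
    """Run nodes until END is reached or the step budget is used up."""
    node = start
    history: List[str] = []
    for _ in range(max_steps):
        if node == "END":
            break
        state = NODES[node](state)
        history.append(node)
        node = EDGES[node](state)
    return {**state, "history": history}


result = run_graph("draft", {})
print(result["attempts"], result["history"])
```

The `max_steps` budget is the part worth copying into real workflows: any graph that allows cycles needs some bound on how often it may loop.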

2. AutoGen

- Core Philosophy: Conversational workflows modeled as dynamic agent dialogues, emphasizing modularity and human-in-the-loop collaboration.

- Key Features:

  - Code Execution: Built-in Python/SQL interpreters for autonomous task execution.

  - Enterprise Integration: Azure cloud compatibility and SOC2-compliant logging.

  - Human Intervention Modes: Configurable interaction modes (`NEVER`, `TERMINATE`, `ALWAYS`).

- Use Cases: Collaborative coding, real-time financial analysis, and customer service automation.
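The three human intervention modes are easier to compare side by side. The sketch below is plain Python rather than AutoGen code: `run_chat`, the scripted replies, and the `approve` callback are hypothetical, and the mode semantics are simplified to "never ask", "ask only when the agent signals termination", and "ask after every reply".

```python
from typing import Callable, List

MODES = {"NEVER", "TERMINATE", "ALWAYS"}


def run_chat(replies: List[str], mode: str,
             ask_human: Callable[[str], str], max_turns: int = 10) -> List[str]:
    """Walk through scripted agent replies, consulting a human according to `mode`."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    transcript: List[str] = []
    for turn, reply in enumerate(replies):
        if turn >= max_turns:
            break  # hard cap so a chat can never loop forever
        transcript.append(f"agent: {reply}")
        done = "TERMINATE" in reply
        if mode == "ALWAYS" or (mode == "TERMINATE" and done):
            transcript.append(f"human: {ask_human(reply)}")
        if done:
            break
    return transcript


def approve(_message: str) -> str:
    # Stand-in for real human input.
    return "ok"


scripted = ["Here is a plan.", "Plan executed. TERMINATE"]
for mode in ("NEVER", "TERMINATE", "ALWAYS"):
    print(mode, len(run_chat(scripted, mode, approve)))
```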

3. CrewAI

- Core Philosophy: Role-based agent teams mimicking human organizational hierarchies.

- Key Features:

  - Role Specialization: Predefined roles (e.g., researcher, writer) with hierarchical task delegation.

  - Memory Systems: Contextual memory for adaptive learning and decision-making.

  - Production Readiness: YAML-based configuration and structured code output (JSON/Pydantic).

- Use Cases: Multi-agent research teams, project management simulations, and collaborative content creation.
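Role-based delegation can be sketched in a few lines of plain Python. This is not the CrewAI API: the `Agent` and `Task` dataclasses, the `run_crew` helper, and the string-formatting "work" are assumptions that only illustrate how tasks are routed to roles and how one agent's output becomes the next agent's context.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Agent:
    role: str
    goal: str

    def work(self, task: str) -> str:
        # Stand-in for an LLM call made with this agent's role and goal.
        return f"[{self.role}] {task}"


@dataclass
class Task:
    description: str
    role: str  # the role that should handle this task


def run_crew(agents: List[Agent], tasks: List[Task]) -> List[str]:
    """Run tasks in order, handing each one to the agent with the matching role."""
    by_role: Dict[str, Agent] = {agent.role: agent for agent in agents}
    outputs: List[str] = []
    for task in tasks:
        agent = by_role[task.role]
        # Pass the previous output along as context for the next role.
        context = f" (given: {outputs[-1]})" if outputs else ""
        outputs.append(agent.work(task.description + context))
    return outputs


crew = [Agent("researcher", "find sources"), Agent("writer", "draft the article")]
plan = [Task("collect facts on DSPy", "researcher"), Task("write a summary", "writer")]
outputs = run_crew(crew, plan)
print(outputs[-1])
```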

4. DSPy

- Core Philosophy: Programmatic optimization of LM prompts and weights, decoupling pipeline logic from prompting strategies.

- Key Features:

  - Declarative Pipelines: Define LM interactions as modular components (e.g., `Predict`, `Retrieve`, `ChainOfThought`).

  - Automatic Prompt Tuning: Compiler optimizes prompts and fine-tunes LM weights for task-specific performance.

  - Zero-Shot Generalization: Build systems that generalize across LMs without manual prompt engineering.

- Use Cases: Question answering, fact-checking, and other LM-driven tasks requiring reproducible, optimized pipelines.
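The declarative idea is that you state the inputs and outputs of an LM call and let a module decide how to phrase the prompt. The sketch below imitates that separation in plain Python. The class names are borrowed from the DSPy vocabulary for readability, but they are toy re-implementations written for this article, not the library's API, and `fake_lm` is a stub standing in for a real model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

LM = Callable[[str], str]


@dataclass(frozen=True)
class Signature:
    """What the step should do: instructions, input fields, one output field."""
    instructions: str
    inputs: Tuple[str, ...]
    output: str


class Predict:
    """Turns a signature into a prompt; the caller never writes the prompt."""

    def __init__(self, signature: Signature, lm: LM):
        self.signature = signature
        self.lm = lm

    def render(self, **kwargs: str) -> str:
        lines = [self.signature.instructions]
        lines += [f"{name}: {kwargs[name]}" for name in self.signature.inputs]
        lines.append(f"{self.signature.output}:")
        return "\n".join(lines)

    def __call__(self, **kwargs: str) -> Dict[str, str]:
        return {self.signature.output: self.lm(self.render(**kwargs))}


class ChainOfThought(Predict):
    """Same signature, different strategy: add a reasoning line before the output."""

    def render(self, **kwargs: str) -> str:
        head, _, tail = super().render(**kwargs).rpartition("\n")
        return f"{head}\nreasoning: think step by step\n{tail}"


def fake_lm(prompt: str) -> str:
    # Stub model: reports how many lines the prompt had.
    return f"echo({len(prompt.splitlines())} lines)"


qa = Signature("Answer the question.", ("question",), "answer")
plain = Predict(qa, fake_lm)(question="What is DSPy?")
cot = ChainOfThought(qa, fake_lm)(question="What is DSPy?")
print(plain, cot)
```

Swapping `Predict` for `ChainOfThought` changes the prompting strategy without touching the signature or the calling code, which is the decoupling the section describes.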

5. OpenAI Swarm

- Core Philosophy: Lightweight framework for scaling OpenAI API-based agents.

- Key Features:

  - Minimalist Design: Rapid prototyping with minimal setup.

  - API-Centric: Optimized for distributing tasks across OpenAI-hosted models such as GPT-4.

- Use Cases: Educational projects and simple multi-agent workflows.

Comparative Analysis

1. Cost and Licensing

| Framework | Licensing | Hidden Costs |
|---|---|---|
| LangGraph | Open-source | LLM API fees (OpenAI, Anthropic), LangSmith |
| AutoGen | Open-source | Azure cloud costs, GPT-4 token usage |
| CrewAI | Open-source | External tool subscriptions (e.g., SerperDev) |
| DSPy | Open-source | Compute costs for prompt optimization |
| OpenAI Swarm | Open-source | Heavy reliance on paid OpenAI APIs |

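Insight: DSPy introduces a one-off computational overhead for prompt tuning. Per-query LLM fees still apply at inference time, but optimized prompts and smaller models can lower them, which makes the up-front cost worthwhile for high-volume applications.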
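A rough way to test the high-volume argument is a break-even calculation: how many queries does it take before a one-off optimization cost is paid back by a lower cost per query? The function and all the numbers below are hypothetical; costs are expressed in integer micro-dollars to keep the arithmetic exact.

```python
def break_even_queries(optimization_cost: int, cost_before: int, cost_after: int) -> int:
    """Smallest number of queries at which the optimization has paid for itself.

    All arguments are in the same integer unit (here: micro-dollars).
    """
    saving = cost_before - cost_after
    if saving <= 0:
        raise ValueError("optimization must lower the per-query cost to pay off")
    # Ceiling division on integers, without going through floats.
    return -(-optimization_cost // saving)


# Hypothetical figures: $50 of optimization compute,
# $0.004 per query before and $0.003 per query after.
n = break_even_queries(50_000_000, 4_000, 3_000)
print(n)
```

Below that volume the optimization run costs more than it saves, so for low-traffic prototypes the tuning step may not be worth it.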

2. Learning Curve

| Framework | Difficulty | Documentation Quality |
|---|---|---|
| LangGraph | High | Assumes LangChain familiarity |
| AutoGen | Moderate | Rich examples but complex multi-agent tuning |
| CrewAI | Low-Moderate | Beginner-friendly guides |
| DSPy | Moderate-High | Academic-style documentation |
| OpenAI Swarm | Low | Minimal setup required |

Insight: DSPy requires an understanding of declarative programming and LM optimization, so it appeals more to researchers than to casual developers.

3. Code Quality and Design Patterns

- LangGraph:

  - Strengths: Explicit state transitions via graph edges; maintainable for complex workflows.

  - Weaknesses: Cyclical workflows may introduce performance overhead.

- AutoGen:

  - Strengths: Modular design with reusable agent components.

  - Weaknesses: Risk of infinite loops without careful prompt engineering.

- DSPy:

  - Strengths: Decoupled logic/prompt layers enable reproducible pipelines.

  - Weaknesses: Limited native multi-agent support compared to CrewAI/LangGraph.

4. Tool Coverage and Integration

| Framework | Native Tools | Custom Tool Support |
|---|---|---|
| LangGraph | LangChain ecosystem | High (Python/JS) |
| AutoGen | Code executors, API callers | Moderate (plugins) |
| DSPy | LM optimizers, retrievers | Limited (pipeline-centric) |
| CrewAI | LangChain tools + role-specific kits | High |

Insight: DSPy excels in LM pipeline optimization but lacks built-in tools for enterprise APIs or databases.

5. Multi-Agent Support

| Framework | Collaboration Style | Scalability |
|---|---|---|
| LangGraph | Graph-based hierarchies | High (complex workflows) |
| AutoGen | Conversational teams | Moderate (chat-driven) |
| CrewAI | Role-based task delegation | High (structured teams) |
| DSPy | Pipeline-centric (single-agent focus) | Limited |

Insight: DSPy is designed for optimizing LM pipelines, not for orchestrating multiple agents, which makes it less suitable for collaborative multi-agent systems.

6. Memory and Context Management

- LangGraph/CrewAI: Comprehensive memory with error recovery and entity tracking.

- AutoGen: Context retained through conversational history.

- DSPy: Stateless by default; context must be manually managed via retrievers or chained prompts.
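Managing context manually in a stateless pipeline usually means passing the history in and out of every call. Here is a minimal sketch of that pattern in plain Python; the `ask` helper, the sliding `window`, and the `counting_lm` stub are assumptions for illustration, not part of any of the frameworks above.

```python
from typing import Callable, List, Tuple

LM = Callable[[str], str]
Turn = Tuple[str, str]  # (question, answer)


def ask(lm: LM, question: str, history: List[Turn],
        window: int = 3) -> Tuple[str, List[Turn]]:
    """Stateless call: the caller owns the history and gets an updated copy back."""
    recent = history[-window:]  # keep only the last few turns in the prompt
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in recent)
    prompt = f"{context}\nQ: {question}\nA:" if context else f"Q: {question}\nA:"
    answer = lm(prompt)
    return answer, history + [(question, answer)]


def counting_lm(prompt: str) -> str:
    # Stub model: reports how many questions it can see in the prompt.
    return f"saw {prompt.count('Q:')} question(s)"


history: List[Turn] = []
first, history = ask(counting_lm, "What is DSPy?", history)
second, history = ask(counting_lm, "Does it keep state?", history)
print(first, "|", second)
```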

7. Enterprise Deployment

- AutoGen: Azure integration and compliance features (GDPR, CCPA).

- LangGraph: Kubernetes support for scaling stateful workflows.

- DSPy: Requires custom deployment pipelines for optimized prompts.

The ideal framework depends on project requirements:

- Precision & Statefulness: LangGraph’s explicit graphs excel in R&D and error recovery.

- Conversational Agility: AutoGen dominates chat-driven automation.

- Team Dynamics: CrewAI mirrors human collaboration.

- LM Optimization: DSPy enables reproducible, high-performance pipelines.

- Rapid Prototyping: OpenAI Swarm for lightweight experimentation.

Hybrid approaches (e.g., DSPy for prompt optimization + LangGraph for workflow control) will dominate future AI architectures. Developers must weigh cost, scalability, and design philosophy to harness these frameworks effectively.

Now let's discuss how these frameworks can be used together in a hybrid approach.

1. Core Focus: LM Optimization vs. Workflow Orchestration

- DSPy: Specializes in programmatic optimization of language model (LM) prompts and weights, decoupling pipeline logic from manual prompt engineering. It automates LM performance tuning for tasks like question answering or fact-checking.

- Others: LangGraph (graph-based workflows), LangChain (tool integration), CrewAI (role-based agents), and AutoGen (conversational workflows) focus on agent design and task orchestration, not LM optimization.

- Why Use Together: DSPy enhances the LM backbone of agents built with other frameworks, improving accuracy and reducing hallucinations.

2. Declarative Programming vs. Procedural Design

- DSPy: Uses declarative pipelines (e.g., `Predict`, `ChainOfThought`) to define LM interactions abstractly. Developers specify what needs to be done, not how.

- Others: LangGraph/AutoGen require explicit procedural code for agent workflows (e.g., graph definitions, chat loops).

- Why Use Together: DSPy simplifies LM integration into complex workflows, letting developers focus on high-level logic.

3. Automatic Prompt Engineering

- DSPy: Automatically generates and refines prompts using its compiler, reducing manual trial-and-error. For example, it optimizes prompts for RAG systems without human intervention.

- Others: LangChain/AutoGen rely on manual prompt engineering, which is time-consuming and error-prone.

- Why Use Together: DSPy ensures optimal prompts for agents, improving their reliability in frameworks like CrewAI or LangGraph.
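At its simplest, automatic prompt tuning is a search: try candidate prompts against a small labelled set and keep the one that scores best on a metric. The sketch below shows only that core loop in plain Python. It is far simpler than what DSPy's optimizers do, and `compile_prompt`, `toy_lm`, the candidate templates, and the two-example dev set are all made up for illustration.

```python
from typing import Callable, Dict, List, Tuple

LM = Callable[[str], str]
Example = Tuple[str, str]  # (question, expected answer)

FACTS: Dict[str, str] = {"France": "Paris", "Japan": "Tokyo"}


def toy_lm(prompt: str) -> str:
    # Stub model: knows two facts, and is only concise when told to be.
    answer = next((a for key, a in FACTS.items() if key in prompt), "unknown")
    return answer if "one word" in prompt else f"The answer is {answer}."


def score(lm: LM, template: str, devset: List[Example]) -> float:
    """Exact-match accuracy of one prompt template on the dev set."""
    hits = sum(lm(template.format(question=q)).strip() == gold for q, gold in devset)
    return hits / len(devset)


def compile_prompt(lm: LM, candidates: List[str],
                   devset: List[Example]) -> Tuple[str, float]:
    """Return the best-scoring template and its score."""
    scored = [(score(lm, template, devset), template) for template in candidates]
    best_score, best_template = max(scored, key=lambda pair: pair[0])
    return best_template, best_score


devset = [("What is the capital of France?", "Paris"),
          ("What is the capital of Japan?", "Tokyo")]
candidates = ["{question}", "Answer in one word. {question}"]
best, accuracy = compile_prompt(toy_lm, candidates, devset)
print(best, accuracy)
```

The important design point is the metric: the search can only be as good as the dev set and scoring function you give it.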

4. Model Agnosticism

- DSPy: Works with any LM (GPT-4, Claude, etc.) and generalizes across models, enabling zero-shot adaptation.

- Others: LangGraph/AutoGen often tie workflows to specific models (e.g., OpenAI APIs), limiting flexibility.

- Why Use Together: DSPy allows seamless LM swapping in multi-agent systems, future-proofing applications.

5. Cost Efficiency

- DSPy: Reduces token usage by optimizing prompts and fine-tuning smaller models, lowering API costs.

- Others: LangGraph/AutoGen workflows can incur high token costs due to unoptimized prompts and multi-step interactions.

- Why Use Together: Integrating DSPy cuts operational expenses for agentic systems built with other tools.

6. Multi-Agent Limitations

- DSPy: Single-agent focus: it optimizes LM pipelines but lacks native support for multi-agent collaboration.

- Others: LangGraph (stateful graphs), CrewAI (role-based teams), and AutoGen (conversational agents) excel at multi-agent coordination.

- Why Use Together: Pair DSPy-optimized LMs with CrewAI/LangGraph agents to balance LM efficiency and multi-agent logic.
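The pairing can be pictured as a workflow whose steps are ordinary functions, where any LM-backed step is built from a prompt that was tuned elsewhere. The sketch below uses plain Python only; `make_lm_step`, `run_workflow`, the stub models, and the prompt template are hypothetical and stand in for an optimized module and an orchestration framework respectively.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Step = Callable[[State], State]
LM = Callable[[str], str]


def make_lm_step(lm: LM, prompt_template: str, in_key: str, out_key: str) -> Step:
    """Wrap a (pre-optimized) prompt and a model as a single workflow step."""
    def step(state: State) -> State:
        prompt = prompt_template.format(text=state[in_key])
        return {**state, out_key: lm(prompt)}
    return step


def retrieve(state: State) -> State:
    # Non-LM step owned by the workflow layer (stand-in for a retriever or tool).
    return {**state, "evidence": f"notes about {state['topic']}"}


def run_workflow(steps: List[Step], state: State) -> State:
    # The orchestrator only sequences steps; it knows nothing about prompts.
    for step in steps:
        state = step(state)
    return state


def upper_lm(prompt: str) -> str:
    return prompt.upper()  # stub model A


def short_lm(prompt: str) -> str:
    return prompt[:10]  # stub model B, swapped in without touching the workflow


template = "Summarize: {text}"  # imagine this came out of a prompt optimizer
final_a = run_workflow([retrieve, make_lm_step(upper_lm, template, "evidence", "summary")],
                       {"topic": "dspy"})
final_b = run_workflow([retrieve, make_lm_step(short_lm, template, "evidence", "summary")],
                       {"topic": "dspy"})
print(final_a["summary"], "|", final_b["summary"])
```

Because the model and the prompt are injected into the step, either can be replaced without changing the workflow definition.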

7. Reproducibility & Debugging

- DSPy: Ensures reproducible LM behavior by codifying prompts and weights, unlike the ad-hoc prompt tweaking common in LangChain/AutoGen.

- Others: LangGraph offers "time travel" debugging for workflows, while AutoGen lacks native replay features.

- Why Use Together: DSPy adds auditability to LM components, complementing workflow-level debugging in other frameworks.

8. Specialized vs. General Use

- DSPy: Best for LM-centric tasks (e.g., retrieval, summarization) requiring high accuracy and adaptability.

- Others: LangGraph/CrewAI/AutoGen handle broader agentic tasks (e.g., enterprise automation, collaborative coding).

- Why Use Together: DSPy enhances the "brain" of agents, while other frameworks provide the "body" (tools, workflows, teams). For example, a CrewAI research agent could use DSPy-optimized LMs for evidence synthesis.

Conclusion

DSPy is not a replacement for LangGraph, CrewAI, or AutoGen but a force multiplier for their LM-driven components. By integrating DSPy, developers can:

- Reduce manual prompt engineering by 50–70%.

- Achieve 20–40% higher accuracy in LM responses.

- Future-proof systems against LM API changes (e.g., GPT-4 → GPT-5).

For teams building AI agents, combining DSPy with workflow frameworks creates a best-of-both-worlds stack: optimized LMs + scalable agent architectures.